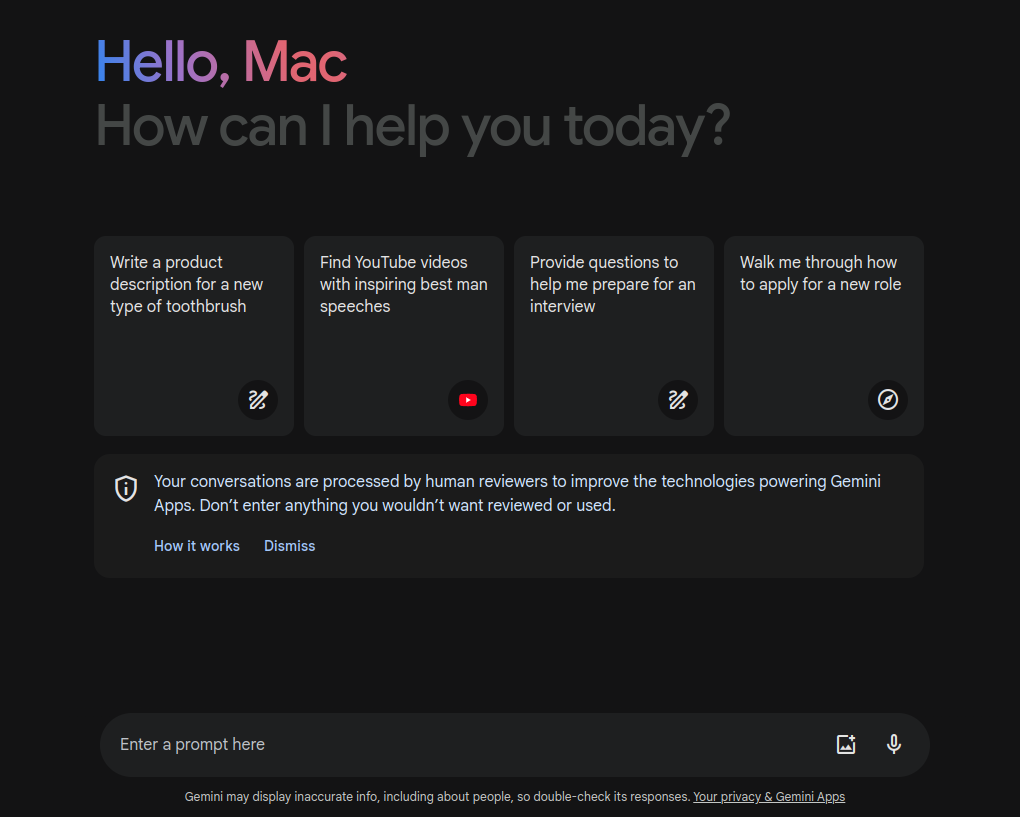

In artificial intelligence (AI), progress is ongoing and comes with both challenges and learning opportunities. A recent example is Gemini’s image generation feature. Gemini (formerly Google Bard), Google’s conversational AI app, recently introduced the ability to create images of people. However, the feature ran into unexpected problems, leading to a temporary pause in its operation. This blog post covers what happened, the lessons learned, and the steps forward.

The Genesis of Gemini’s Image Generation

Three weeks ago, Gemini embarked on a bold new venture by launching an image generation feature designed to create images of people. This feature, built on the Imagen 2 AI model, aimed to offer diverse and accurate representations of people from around the world. The intention was to avoid the pitfalls of previous image generation technologies, such as the creation of inappropriate content or the depiction of real individuals without consent.

Unforeseen Challenges and Immediate Response

Despite the team’s best efforts, the feature did not perform as expected. Some of the generated images were inaccurate or offensive, a far cry from the inclusivity and accuracy that Gemini aimed for. User feedback was swift and clear, prompting Gemini’s team to acknowledge the issue and temporarily suspend the generation of images of people.

Senior Vice President Prabhakar Raghavan explained that the feature’s shortcomings were twofold. First, the tuning meant to ensure that Gemini showed a range of people failed to account for cases where a broad range of representations was unnecessary or inappropriate. Second, the model became overly cautious and refused certain prompts, wrongly interpreting them as sensitive. As a result, the model overcompensated in some cases and was over-conservative in others, producing images that were inaccurate and failed to meet users’ expectations.

The Path Forward: Testing, Learning, and Improving

The temporary suspension of this feature is not the end but a step toward significant improvement. Gemini’s team is now focused on refining the model to ensure it can accurately and respectfully generate images of people across a spectrum of requests. Extensive testing is underway to address the issues identified and to prevent similar problems in the future.

One key takeaway from this experience is that AI technology carries inherent challenges, especially in image and text generation. Gemini, while a tool for creativity and productivity, is not infallible. “Hallucinations,” or errors in generated output, are a known challenge for large language models (LLMs).

Gemini’s Commitment to Responsible AI Use

Gemini’s team is dedicated to rolling out AI technology responsibly. The team understands the potential of AI to help in numerous ways but also recognizes the importance of addressing issues as they arise. The double-check feature within Gemini, which checks responses against web content, is one measure intended to improve reliability. However, for current events and evolving news, the team recommends relying on Google Search for the most up-to-date and accurate information.

Concluding Thoughts

The journey of Gemini’s image generation feature underscores the complexities of AI development and the importance of continuous improvement. By taking user feedback seriously and committing to responsible AI use, Gemini aims to navigate these challenges successfully. As AI continues to evolve, so too will the strategies to mitigate its limitations, ensuring that technology like Gemini can fulfill its potential as a valuable tool for creativity and productivity.